| Experimental design for optimizing black-box functions is a fundamental problem in many science and engineering fields. In this problem, sample efficiency is crucial due to the time, money, and safety costs of real-world design evaluations. Existing approaches either rely on active data collection or access to large, labeled datasets of past experiments, making them impractical in many real-world scenarios. In this work, we address the more challenging yet realistic setting of few-shot experimental design, where only a few labeled data points of input designs and their corresponding values are available. We introduce Experiment Pretrained Transformers (ExPT), a foundation model for few-shot experimental design that combines unsupervised learning and in-context pretraining. In ExPT, we only assume knowledge of a finite collection of unlabelled data points from the input domain and pretrain a transformer neural network to optimize diverse synthetic functions defined over this domain. Unsupervised pretraining allows ExPT to adapt to any design task at test time in an in-context fashion by conditioning on a few labeled data points from the target task and generating the candidate optima. We evaluate ExPT on few-shot experimental design in challenging domains and demonstrate its superior generality and performance compared to existing methods. |

|

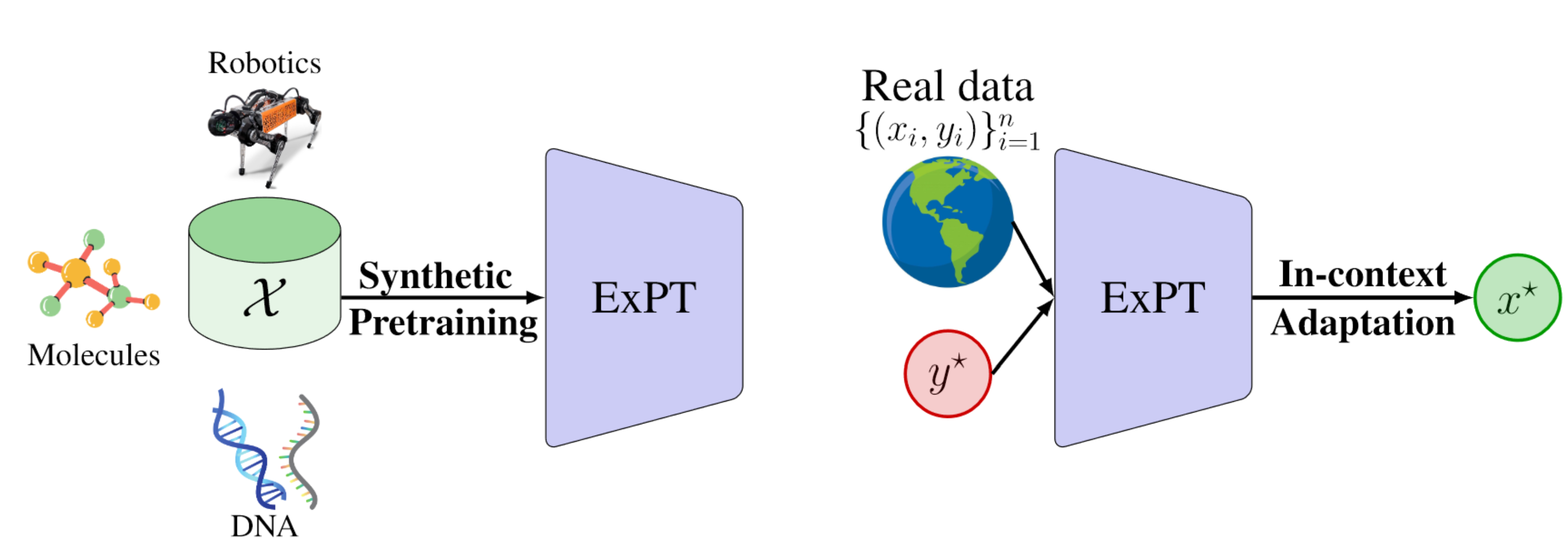

| ExPT follows a pretraining-adaptation approach to create and adapt a foundation model for experimental design. In the pretraining phase, the model is given a large unlabeled dataset, whose domain can range from robot designs to DNA sequences. This unlabeled dataset is then used to create a synthetic dataset, using a novel Gaussian Process-based approach that scales up pretraining. During adaptation, the model is given a few real-world designs and their corresponding values, and it produces new, high-quality designs. |
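As a rough illustration of how Gaussian Processes can turn unlabeled inputs into synthetic labeled data, the sketch below draws several random functions from a GP prior and evaluates them on a set of unlabeled designs. The RBF kernel, its hyperparameters, the toy input distribution, and the helper names `rbf_kernel` and `sample_synthetic_functions` are assumptions made for this example; the text above only states that Gaussian Processes are used.

```python
import numpy as np

def rbf_kernel(X, lengthscale=1.0, variance=1.0):
    """Squared-exponential kernel matrix for the rows of X (assumed kernel choice)."""
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    d2 = np.maximum(d2, 0.0)  # guard against tiny negative values from rounding
    return variance * np.exp(-0.5 * d2 / lengthscale ** 2)

def sample_synthetic_functions(X, n_functions, lengthscale=1.0, variance=1.0,
                               jitter=1e-6, seed=0):
    """Draw n_functions samples from a zero-mean GP prior, evaluated at X."""
    rng = np.random.default_rng(seed)
    K = rbf_kernel(X, lengthscale, variance) + jitter * np.eye(len(X))
    L = np.linalg.cholesky(K)
    Z = rng.standard_normal((len(X), n_functions))
    return (L @ Z).T  # shape: (n_functions, n_points)

# Hypothetical unlabeled designs: 64 points in a 5-dimensional input domain.
rng = np.random.default_rng(0)
X_unlabeled = rng.uniform(-1.0, 1.0, size=(64, 5))

# Each row is one synthetic function's values on the unlabeled designs.
Y_synthetic = sample_synthetic_functions(X_unlabeled, n_functions=8)
```

Each row of `Y_synthetic` paired with `X_unlabeled` gives one synthetic labeled task that a model could be pretrained on.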

|

| ExPT has an encoder-decoder architecture. A transformer-based encoder takes in context points (y1, x1), ..., (ym, xm) as well as target y values ym+1, ..., yN to produce embeddings hm+1, ..., hN. A VAE decoder then generates designs xm+1, ..., xN corresponding to the target objective values. |
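To make the data flow of the encoder concrete, here is a shape-level sketch in which a single attention layer stands in for the full transformer encoder: target y values attend to the context (y, x) pairs and yield one embedding per target. The dimensions, the random untrained weights, and the single-head attention are all assumptions made for this example, and the VAE decoder is omitted; this is not the model's actual implementation.

```python
import numpy as np

def softmax(a, axis=-1):
    a = a - a.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

rng = np.random.default_rng(0)
m, n_target, d_x, d_model = 8, 4, 5, 16  # hypothetical sizes

# Context points (y_i, x_i) for i = 1..m and target values y_{m+1}..y_N.
x_ctx = rng.standard_normal((m, d_x))
y_ctx = rng.standard_normal((m, 1))
y_tgt = rng.standard_normal((n_target, 1))

# Hypothetical, untrained projection weights.
W_ctx = rng.standard_normal((d_x + 1, d_model)) / np.sqrt(d_x + 1)
W_tgt = rng.standard_normal((1, d_model))
W_q, W_k, W_v = (rng.standard_normal((d_model, d_model)) / np.sqrt(d_model)
                 for _ in range(3))

# Embed context pairs and target values as tokens.
ctx_tokens = np.concatenate([y_ctx, x_ctx], axis=1) @ W_ctx  # (m, d_model)
tgt_tokens = y_tgt @ W_tgt                                   # (n_target, d_model)

# Each target token attends over the context tokens.
scores = (tgt_tokens @ W_q) @ (ctx_tokens @ W_k).T / np.sqrt(d_model)
attn = softmax(scores, axis=-1)                              # (n_target, m)
h = attn @ (ctx_tokens @ W_v)                                # (n_target, d_model)
```

In this sketch, each row of `h` plays the role of one embedding h_i that a decoder would map to a design x_i.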

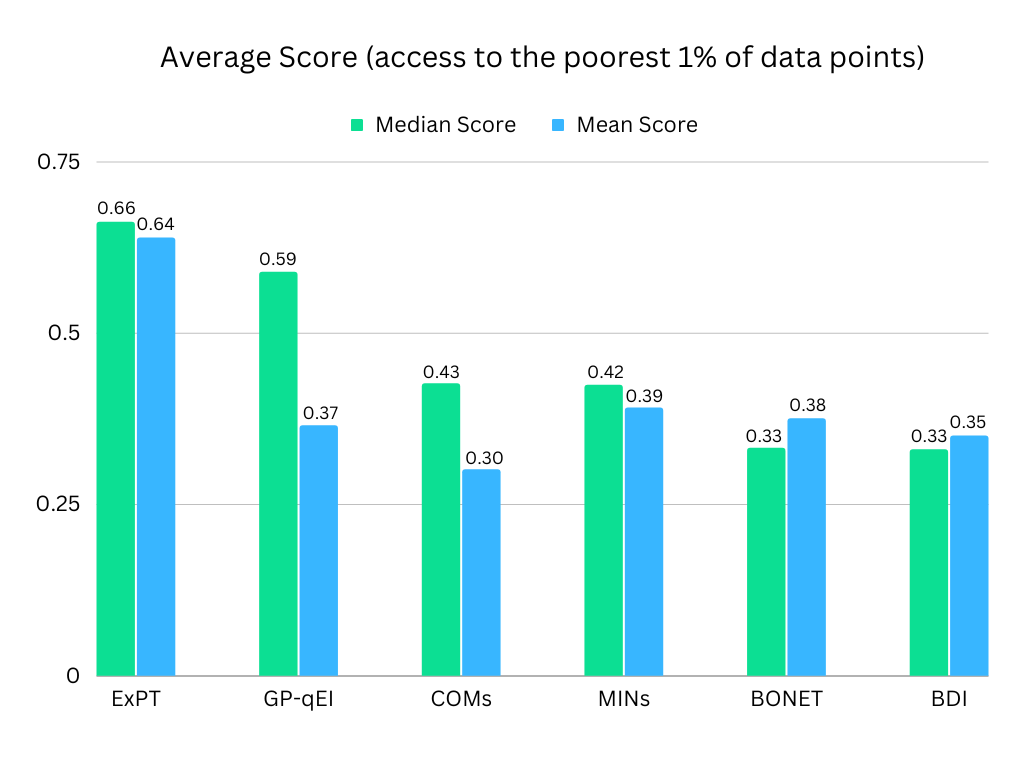

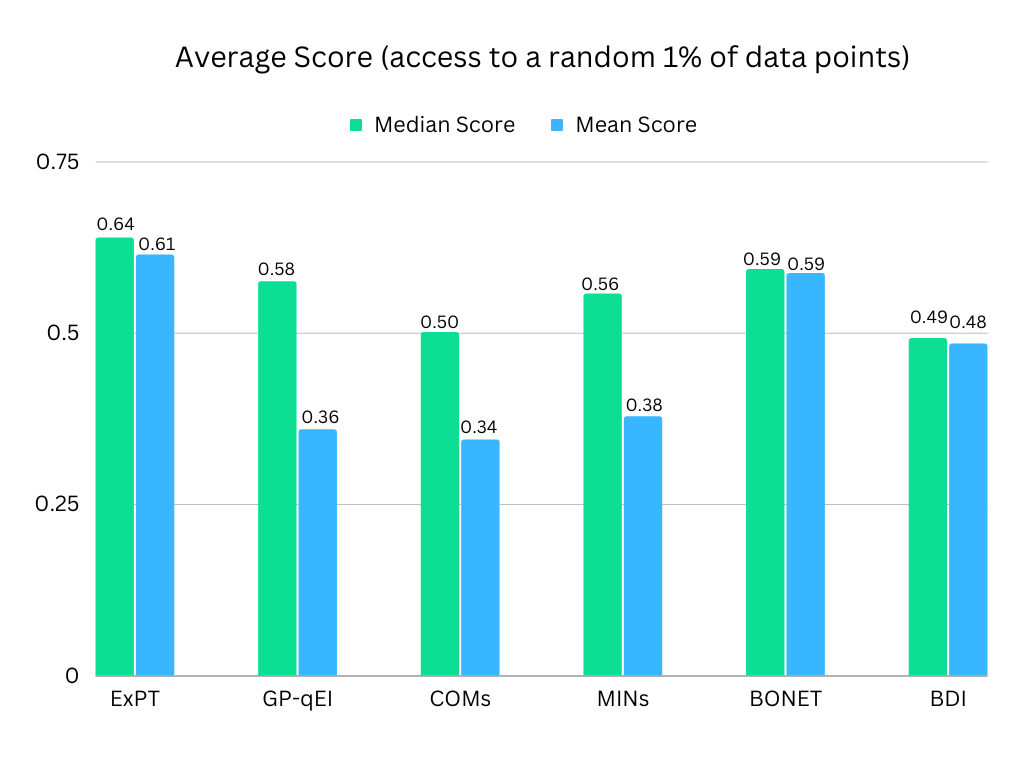

| Four few-shot tasks are constructed using data from the Design-Bench benchmark: D'Kitty and Ant from the robotics domain, and TFBind-8 and TFBind-10 from the genetics domain. We consider two scenarios, each using only 1% of the total available data. Both are few-shot settings, and they demonstrate the performance of the model even when the available designs are not high-performing. |

| The models are given access to the 1% worst-performing datapoints. |

|

| The models are given access to a random 1% subset of the datapoints. |
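The two scenarios above can be sketched as simple subset selections over a labeled dataset. The sketch assumes that higher objective values are better (so "worst-performing" means lowest values) and uses a synthetic toy dataset; the helper names `worst_k_percent` and `random_k_percent` are hypothetical and not taken from Design-Bench.

```python
import numpy as np

def worst_k_percent(X, y, percent=1.0):
    """Keep the `percent` of points with the lowest objective values."""
    k = max(1, int(len(y) * percent / 100))
    idx = np.argsort(y)[:k]  # ascending sort: lowest (worst) values first
    return X[idx], y[idx]

def random_k_percent(X, y, percent=1.0, seed=0):
    """Keep a uniformly random `percent` of the points, without replacement."""
    rng = np.random.default_rng(seed)
    k = max(1, int(len(y) * percent / 100))
    idx = rng.choice(len(y), size=k, replace=False)
    return X[idx], y[idx]

# Toy stand-in for a benchmark dataset: 1000 designs with scalar scores.
rng = np.random.default_rng(0)
X = rng.standard_normal((1000, 5))
y = rng.standard_normal(1000)

X_poor, y_poor = worst_k_percent(X, y)   # the 1% worst-performing points
X_rand, y_rand = random_k_percent(X, y)  # a random 1% subset
```

With 1000 toy points, each subset contains 10 designs, which is all the labeled data a model would see in the corresponding scenario.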

|

| Further details about these experiments, as well as other experimental setups such as synthetic tasks, multi-objective tasks, and ablation studies comparing forward and inverse modeling, can be found in Section 3 of the paper. |
